Initial configuration for Scrubbing and Deep Scrubbing in a Ceph cluster

| 🌐 This document is available in both English and Ukrainian. Use the language toggle in the top right corner to switch between versions. |

After installing the Platform on an OKD cluster, it is recommended to configure the scrubbing and deep scrubbing processes in the Ceph cluster to ensure data integrity and system stability.

| These settings are intended for production environments and should be applied immediately after Platform installation. |

1. Overview

Scrubbing and deep scrubbing are background processes in Ceph that verify data and detect corrupted or inconsistent objects:

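- Scrubbing compares object metadata, such as object size and attributes, across the replicas of each Placement Group (PG) and detects metadata inconsistencies.
- Deep scrubbing reads the actual object data, computes checksums, and compares them across replicas. This also catches bad disk sectors that regular scrubbing cannot detect.
- Scrubbing runs automatically, but you can tune its parameters to limit the performance impact.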

2. Why scrubbing matters

Scrubbing adds disk I/O and CPU load that can degrade cluster performance. To minimize the impact:

- Schedule regular scrubbing during off-peak hours.
- Run deep scrubbing manually or during defined low-load windows.
- Limit resource usage, for example the number of concurrent scrubs per OSD, to avoid performance degradation.

3. Recommended initial settings

After deploying the Platform on the OKD cluster, complete the following recommended actions in the Ceph cluster:

- ① Configure general scrubbing parameters.
- ② Disable automatic deep scrubbing.
- ③ Schedule regular scrubbing.

Each step is explained below.

3.1. Configuring general scrubbing parameters

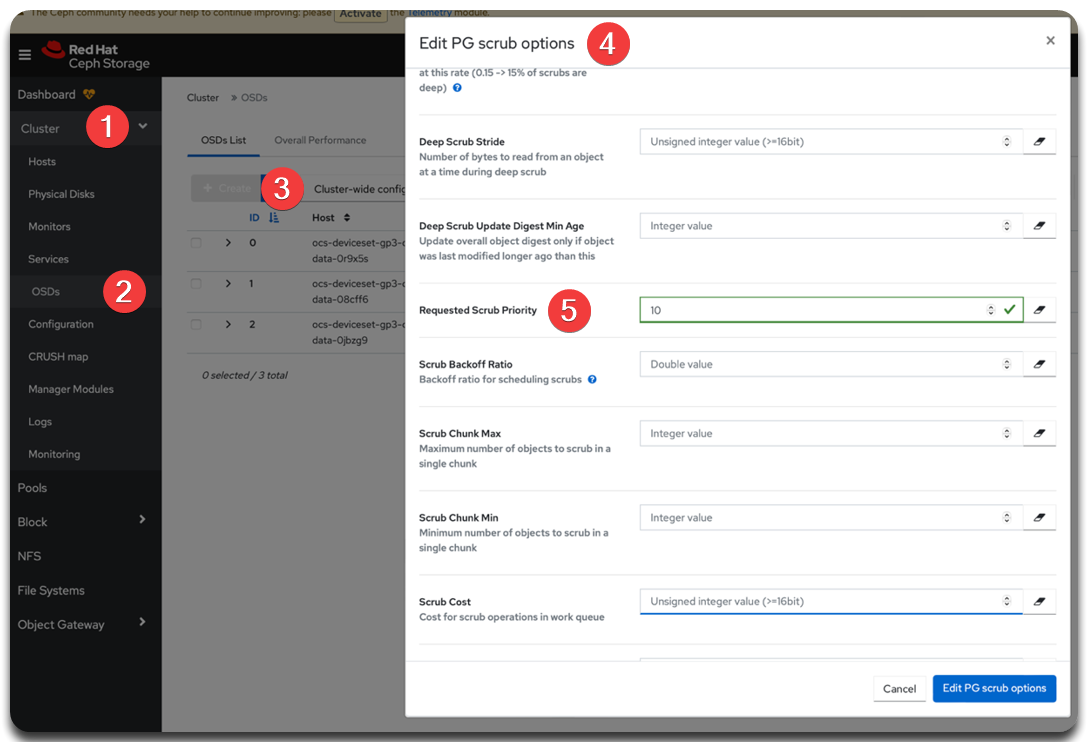

3.1.1. Setting scrubbing process priority

Lower the priority of manually requested scrubs so that client I/O requests take precedence. For example, set the value to 10:

osd_requested_scrub_priority = 10

To set this value in the Ceph Dashboard UI:

- Open the Ceph Dashboard UI.
- Go to .
- Select PG scrub and expand the Advanced section.
- Set the value for Requested Scrub Priority.

Figure 1. Ceph Dashboard UI: Requested Scrub Priority


Alternatively, use the Ceph CLI from the rook-operator pod in the openshift-storage project:

ceph --conf=/var/lib/rook/openshift-storage/openshift-storage.config config set osd osd_requested_scrub_priority 10

3.1.2. Configuring concurrent scrubbing processes

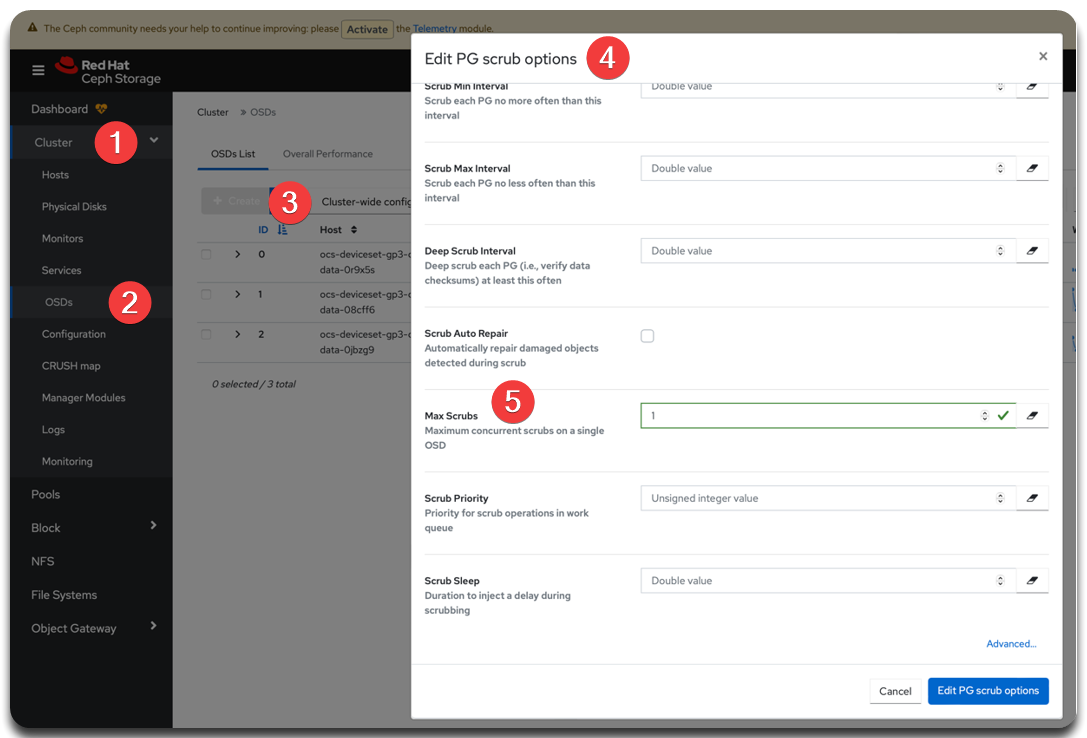

Allow only one scrubbing process per OSD by setting osd_max_scrubs = 1.

This parameter defines how many scrubbing processes can run concurrently on a single OSD. Limiting it to 1 helps to:

- reduce disk and CPU load;
- prevent performance degradation for client requests;
- ensure cluster stability in production environments.

| OSD (Object Storage Daemon) is a key Ceph component that stores data, processes read/write requests, and performs scrubbing. Each OSD works independently, and data integrity checks (scrubs) are local operations. |

| If your cluster contains a large number of PGs (Placement Groups) that have missed their scheduled scrubbing ("overdue"), you may temporarily increase osd_max_scrubs. This speeds up overdue scrubbing but increases the load. ❗ Revert to osd_max_scrubs = 1 afterwards. ➡️ See PG states: Placement Group States. |
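If you do raise the limit, a pair of small shell functions can keep the temporary change and its revert explicit. This is a minimal sketch: the function names and the CEPH_CONF variable are hypothetical, and the value to raise to is your choice, because this guide does not prescribe one.

```shell
#!/usr/bin/env bash
# Sketch: temporarily raise osd_max_scrubs to drain an overdue-scrub backlog,
# then restore the value recommended in this guide.
# CEPH_CONF and both function names are hypothetical helpers, not part of Ceph.
CEPH_CONF="${CEPH_CONF:-/var/lib/rook/openshift-storage/openshift-storage.config}"

# Raise the per-OSD scrub concurrency limit to the given positive integer.
raise_max_scrubs() {
  local value="$1"
  case "$value" in
    '' | *[!0-9]* | 0)
      echo "usage: raise_max_scrubs <positive integer>" >&2
      return 1
      ;;
  esac
  ceph --conf="$CEPH_CONF" config set osd osd_max_scrubs "$value"
}

# Restore the production value (one scrub per OSD).
revert_max_scrubs() {
  ceph --conf="$CEPH_CONF" config set osd osd_max_scrubs 1
}
```

For example, run raise_max_scrubs 2 (an illustrative value) while the backlog drains, then run revert_max_scrubs.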

To change the value in the Ceph Dashboard UI:

- Open the Ceph Dashboard UI.
- Navigate to .
- Select PG scrub and set Max Scrubs.

Figure 2. Ceph Dashboard UI: Max Scrubs


Alternatively, use the Ceph CLI from the rook-operator pod in the openshift-storage project:

ceph --conf=/var/lib/rook/openshift-storage/openshift-storage.config config set osd osd_max_scrubs 1

3.2. Disabling automatic deep scrubbing

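Ceph runs deep scrubbing automatically by default, deep-scrubbing each PG about once per osd_deep_scrub_interval (one week unless changed). Deep scrubbing ensures data integrity by reading and checking actual object contents, not just metadata, so it consumes considerably more disk I/O and CPU than regular scrubbing.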

| In production, run deep scrubbing manually during maintenance windows or low-load periods. This prevents:

Learn more: Manual deep scrubbing. |

3.2.1. How to disable automatic deep scrubbing

To block automatic deep scrubbing, apply the nodeep-scrub flag at the pool level:

- Locate the ceph.conf file path used by your cluster (the example below specifies it explicitly).
- Run this command to apply the nodeep-scrub flag to all pools:

for pool in $(ceph --conf=/var/lib/rook/openshift-storage/openshift-storage.config osd pool ls); do
  ceph --conf=/var/lib/rook/openshift-storage/openshift-storage.config osd pool set "$pool" nodeep-scrub true
done

The osd pool ls command lists all pools in the cluster. The loop applies the nodeep-scrub flag to each.
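The same loop can be wrapped in a reusable function that can also clear the flag and reports failures. This is convenient when you later re-enable deep scrubbing or add pools. A minimal sketch; the function name and the CEPH_CONF variable are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: set (true) or clear (false) the nodeep-scrub flag on every existing pool.
# CEPH_CONF and set_nodeep_scrub_all_pools are hypothetical names.
CEPH_CONF="${CEPH_CONF:-/var/lib/rook/openshift-storage/openshift-storage.config}"

# Usage: set_nodeep_scrub_all_pools true|false
set_nodeep_scrub_all_pools() {
  local state="${1:-true}" pool rc=0
  case "$state" in
    true | false) ;;
    *)
      echo "usage: set_nodeep_scrub_all_pools true|false" >&2
      return 1
      ;;
  esac
  # `osd pool ls` prints one pool name per line.
  for pool in $(ceph --conf="$CEPH_CONF" osd pool ls); do
    # Keep going if one pool fails, but remember the failure.
    ceph --conf="$CEPH_CONF" osd pool set "$pool" nodeep-scrub "$state" || rc=1
  done
  return "$rc"
}
```

Pool flags are set per pool, so pools created after you run this do not get nodeep-scrub automatically; re-run set_nodeep_scrub_all_pools true after adding pools.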

3.2.2. How to verify the flag is set

Check that nodeep-scrub is set:

ceph --conf=/var/lib/rook/openshift-storage/openshift-storage.config osd pool ls detail

In the output, the flags field of every pool should include nodeep-scrub.
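To avoid scanning the output by eye, you can list only the pools whose flags lack nodeep-scrub. A minimal sketch, assuming each pool line of osd pool ls detail has the form pool <id> '<name>' ... flags <comma-separated flags> ...; the function name is hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: print the names of pools whose flags do NOT include nodeep-scrub.
# Assumes the `osd pool ls detail` line format:
#   pool <id> '<name>' ... flags <flag1,flag2,...> ...
# CEPH_CONF and pools_missing_nodeep_scrub are hypothetical names.
CEPH_CONF="${CEPH_CONF:-/var/lib/rook/openshift-storage/openshift-storage.config}"

pools_missing_nodeep_scrub() {
  ceph --conf="$CEPH_CONF" osd pool ls detail | awk '
    $1 == "pool" {
      name = $3
      gsub("\047", "", name)    # strip the quotes around the pool name
      ok = 0
      for (i = 4; i < NF; i++)
        if ($i == "flags" && ("," $(i + 1) ",") ~ /,nodeep-scrub,/) ok = 1
      if (!ok) print name
    }'
}
```

An empty result means every pool has the flag.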

| ❗ Run deep scrubbing manually at least once every few weeks, depending on your SLA and data criticality. Schedule it during low-load periods, such as weeknights or weekends. No formal maintenance window is required if there is no impact on critical services. 📌 Avoid a PG backlog, where Placement Groups go unchecked for too long.

In large clusters, run daily with limited concurrency ( |
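To watch for a PG backlog, you can read the overdue-PG count from Ceph's health output. A minimal sketch, assuming your Ceph release reports overdue PGs under the PG_NOT_DEEP_SCRUBBED health check with a summary line such as PG_NOT_DEEP_SCRUBBED: <N> pgs not deep-scrubbed in time; the function name is hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: print how many PGs Ceph reports as overdue for deep scrubbing.
# Assumes a health detail summary line like:
#   [WRN] PG_NOT_DEEP_SCRUBBED: <N> pgs not deep-scrubbed in time
# The summary line is parsed (not the per-PG lines) because the per-PG list
# in health detail can be truncated. Names are hypothetical.
CEPH_CONF="${CEPH_CONF:-/var/lib/rook/openshift-storage/openshift-storage.config}"

overdue_deep_scrub_count() {
  local n
  n="$(ceph --conf="$CEPH_CONF" health detail |
    sed -n 's/.*PG_NOT_DEEP_SCRUBBED:\{0,1\} \([0-9][0-9]*\) pgs\{0,1\} not deep-scrubbed.*/\1/p' |
    head -n 1)"
  # No matching health check means nothing is reported as overdue.
  echo "${n:-0}"
}
```

A non-zero value that keeps growing between manual deep-scrub runs suggests the schedule is not keeping up.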

3.3. Configuring the regular scrubbing schedule

Run scrubbing nightly from 22:00 to 04:00 UTC (00:00 to 06:00 Kyiv time in winter, UTC+2), limiting it to one concurrent process per OSD (osd_max_scrubs = 1). This mode allows regular integrity checks with minimal cluster impact (see Configuring concurrent scrubbing processes).

Scrubbing is enabled by default. Specify the schedule in UTC and convert from your local time zone accordingly.
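Converting a local hour to the UTC hour used in the settings below is modular arithmetic: subtract the local UTC offset and wrap into the range 0 to 23. A minimal sketch, assuming the OSDs evaluate the window in UTC as this guide does; the helper name is hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: convert a local wall-clock hour into the UTC hour for osd_scrub_*_hour.
# local_to_utc_hour is a hypothetical helper. Pass the local UTC offset in hours,
# for example 2 for Kyiv in winter or 3 in summer.
local_to_utc_hour() {
  local hour="$1" offset="$2"
  # Add 24 before the final remainder so the result is never negative.
  echo $(( ( (hour - offset) % 24 + 24 ) % 24 ))
}
```

Because the Ceph settings are fixed UTC hours, the 22 to 4 window shifts to 01:00 to 07:00 Kyiv time in summer (UTC+3); adjust the values seasonally if the local window matters.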

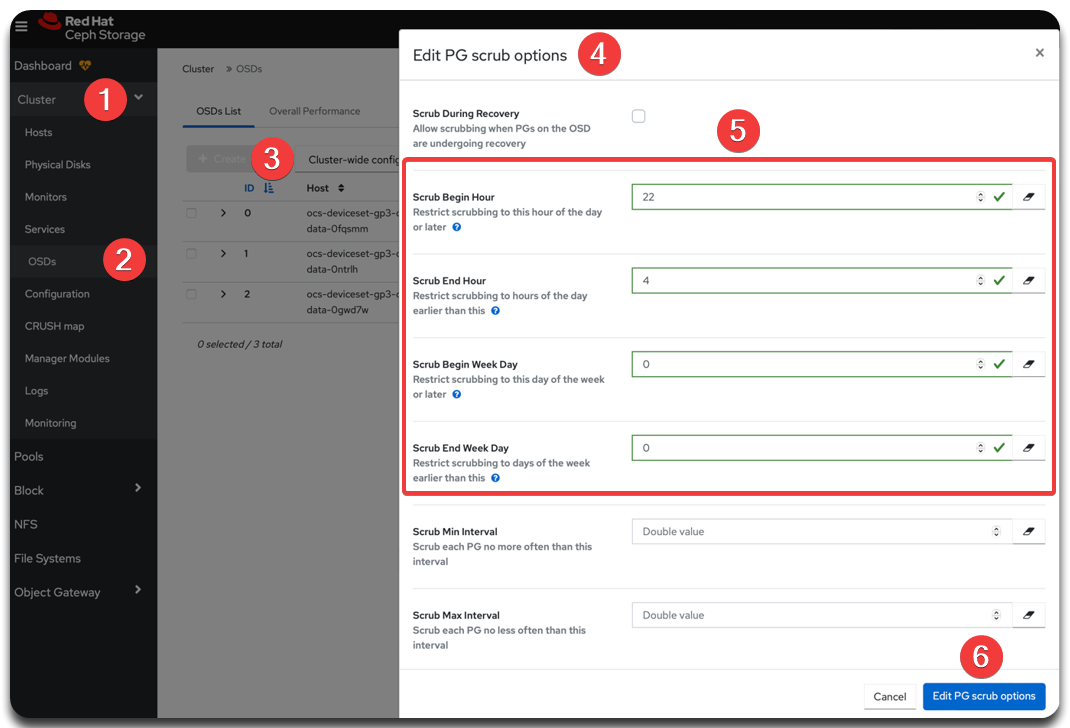

3.3.1. How to configure the schedule via Ceph Dashboard

- Open the Ceph Dashboard UI.
- Navigate to .
- Select the PG scrub section and configure the parameters listed below.

These parameters define the exact time window for automatic scrubbing. Values are in UTC; adjust for your local time (for example, Kyiv is UTC+2 in winter and UTC+3 in summer).

Table 1. Ceph Dashboard UI: PG scrub schedule settings

| Parameter | Value | Description |
|---|---|---|
| osd_scrub_begin_hour | 22 | Start hour (UTC) of the scrubbing window. 22 UTC = 00:00 (12 am) Kyiv time (UTC+2), so scrubbing starts no earlier than midnight local time. |
| osd_scrub_end_hour | 4 | End hour (UTC) of the scrubbing window; the end hour itself is excluded. 4 UTC = 06:00 Kyiv time (UTC+2), so no new automatic scrubs start after 6 am. |
| osd_scrub_begin_week_day | 0 | Start day of the scrubbing window. 0 = Sunday (Ceph day numbering: 0 = Sunday, …, 6 = Saturday). If begin and end are both 0, scrubbing is allowed daily. |
| osd_scrub_end_week_day | 0 | End day of the scrubbing window, also 0 = Sunday. The setting begin_week_day = end_week_day = 0 enables scrubbing every day. |

📌 Ceph weekday logic:

- begin_week_day = 0, end_week_day = 0 means "Sunday through Sunday": all days are allowed.
- Set begin_week_day = 1, end_week_day = 6 to restrict scrubbing to weekdays (Monday through Friday); the end day itself is excluded.

- Click Edit PG scrub options to apply changes.

Figure 3. Ceph Dashboard UI: PG scrub schedule

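Both the hour and the weekday settings describe a half-open window. The begin value is included and the end value is excluded. A begin value greater than the end value wraps around midnight (or the end of the week), and equal values allow everything. The function below is a sketch that re-implements this rule so you can check a schedule before applying it. It is not Ceph code, and the name in_window is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch (not Ceph source): the half-open window test used for
# osd_scrub_begin/end_hour and osd_scrub_begin/end_week_day.
# in_window is a hypothetical helper. Exit status 0 = allowed, 1 = blocked.
in_window() {
  local begin="$1" end="$2" now="$3"
  if [ "$begin" -lt "$end" ]; then
    # Plain range, e.g. 1..6 allows 1, 2, 3, 4, 5.
    [ "$now" -ge "$begin" ] && [ "$now" -lt "$end" ]
  else
    # Wrapping range, e.g. 22..4 allows 22, 23, 0, 1, 2, 3.
    # begin == end also lands here and allows every value.
    [ "$now" -ge "$begin" ] || [ "$now" -lt "$end" ]
  fi
}
```

For example, in_window 22 4 3 succeeds (03:00 UTC is inside the window), while in_window 22 4 4 fails. Note that Ceph still scrubs a PG outside the window once its scrub interval exceeds osd_scrub_max_interval.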

3.3.2. How to configure the schedule via Ceph CLI

You can also use the Ceph CLI from the rook-operator pod in the openshift-storage project. Run these commands:

- Set the scrub start and end times (UTC):

ceph --conf=/var/lib/rook/openshift-storage/openshift-storage.config config set osd osd_scrub_begin_hour 22
ceph --conf=/var/lib/rook/openshift-storage/openshift-storage.config config set osd osd_scrub_end_hour 4

- Set the weekday range (0 = Sunday; equal begin and end values allow daily runs):

ceph --conf=/var/lib/rook/openshift-storage/openshift-storage.config config set osd osd_scrub_begin_week_day 0
ceph --conf=/var/lib/rook/openshift-storage/openshift-storage.config config set osd osd_scrub_end_week_day 0

- Check the applied values (replace <parameter> accordingly):

ceph --conf=/var/lib/rook/openshift-storage/openshift-storage.config config get osd <parameter>

Example: check the scrub start hour:

ceph --conf=/var/lib/rook/openshift-storage/openshift-storage.config config get osd osd_scrub_begin_hour
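To check every setting from this guide in one pass, you can loop over the parameter names and compare the output of ceph config get with the expected values. A minimal sketch; the function name and the CEPH_CONF variable are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: compare applied scrub settings with the values recommended in this guide.
# CEPH_CONF and verify_scrub_config are hypothetical names.
CEPH_CONF="${CEPH_CONF:-/var/lib/rook/openshift-storage/openshift-storage.config}"

verify_scrub_config() {
  local rc=0 pair name expected actual
  for pair in \
    osd_requested_scrub_priority=10 \
    osd_max_scrubs=1 \
    osd_scrub_begin_hour=22 \
    osd_scrub_end_hour=4 \
    osd_scrub_begin_week_day=0 \
    osd_scrub_end_week_day=0; do
    name="${pair%%=*}"      # text before "="
    expected="${pair#*=}"   # text after "="
    actual="$(ceph --conf="$CEPH_CONF" config get osd "$name")"
    if [ "$actual" = "$expected" ]; then
      echo "OK   $name = $actual"
    else
      echo "DIFF $name = $actual (expected $expected)"
      rc=1
    fi
  done
  return "$rc"
}
```

The function prints one OK or DIFF line per parameter and returns a non-zero status if any value differs.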