Extending Ceph storage

This guide describes how to increase the disk space of the Ceph storage platform on an OpenShift cluster (OKD 4.x).

| Increasing the disk space may be part of a scheduled upgrade, or it may become necessary when the storage reaches 85% of its capacity. |
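As a rough illustration of the 85% threshold mentioned above, the check can be sketched in a few lines of Python. The function name `needs_expansion` and the capacity figures are hypothetical; how you obtain the actual used and total capacity depends on your monitoring setup.

```python
def needs_expansion(used_bytes: int, total_bytes: int, threshold_percent: int = 85) -> bool:
    """Return True when used capacity has reached threshold_percent of the total."""
    if total_bytes <= 0:
        raise ValueError("total_bytes must be positive")
    # Integer arithmetic avoids floating-point rounding right at the threshold.
    return used_bytes * 100 >= total_bytes * threshold_percent


GIB = 1024 ** 3
# Hypothetical figures: 660 GiB used out of 768 GiB total.
print(needs_expansion(660 * GIB, 768 * GIB))
```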

1. Prerequisites

The platform administrator must have access to the cluster with the `cluster-admin` role.

2. Procedure

-

Expand the root volumes at the cloud provider level (we will use AWS as an example). To do this, perform these steps:

-

Go to the OKD web console.

-

Go to

openshift-storagenamespace. -

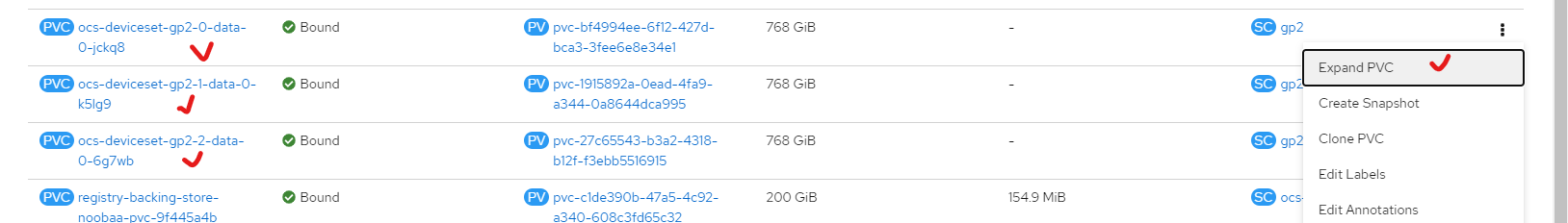

Open the Persistent Volume Claims section and select the

Expand PVCoption from the context menu for these three volumes:-

ocs-deviceset-gp2-0-data-0-xxx -

ocs-deviceset-gp2-1-data-0-xxx -

ocs-deviceset-gp2-2-data-0-xxx

-

-

Specify the size for these volumes.

-

-

Modify the following custom resources (CRs):

For details, refer to OKD documentation: Managing resources from Custom Resource Definitions. -

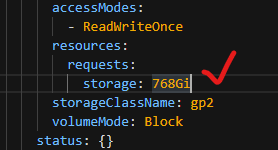

Find

ocs-storagecluster(an instance of thestoragecluster.ocs.openshift.ioCRD). -

Find the

.yamlconfiguration file and change the value of thestorageparameter tostorage: 768Gi, which was initially set at the stage of expanding the root volumes (see step 1).

Alternatively, you can change this value using a command-line interface (CLI):

oc patch...

-

-

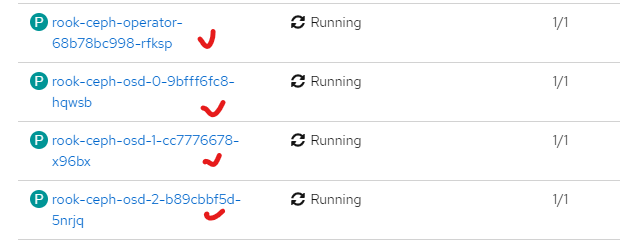

In the

openshift-storagenamespace, restart the necessary pods:

Alternatively, restart all pods in this namespace.

For details on working with pods in Openshift, refer to the Origin Kubernetes Distribution (OKD) documentation: Using pods.

Once the Ceph cluster completes the automatic procedures that follow, the disk space is extended to the size you specified.

| If the Ceph disk space does not expand after step 3 and Ceph stops working, force-restart the instances in the Ceph MachineSet of the OpenShift cluster. |
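The guide leaves the exact `oc patch` invocation unspecified, so the following is only a minimal Python sketch of what a CLI route for steps 1 and 2 might look like. It builds and prints the commands and does not run them. The helper names are hypothetical, the `xxx` suffixes are placeholders copied from the guide, and the StorageCluster field path (first device set, `dataPVCTemplate`) is an assumption that should be checked against the YAML of your own `ocs-storagecluster` before use.

```python
import json
import shlex
from typing import List

NAMESPACE = "openshift-storage"
STORAGE_CLUSTER = "ocs-storagecluster"
# PVC names as written in the guide; "xxx" stands for the generated suffix.
PVC_NAMES = [
    "ocs-deviceset-gp2-0-data-0-xxx",
    "ocs-deviceset-gp2-1-data-0-xxx",
    "ocs-deviceset-gp2-2-data-0-xxx",
]


def pvc_resize_command(pvc_name: str, size: str) -> List[str]:
    """Build an `oc patch` call that raises a PVC's storage request (step 1)."""
    patch = {"spec": {"resources": {"requests": {"storage": size}}}}
    return ["oc", "patch", "pvc", pvc_name, "-n", NAMESPACE,
            "--type", "merge", "-p", json.dumps(patch)]


def storagecluster_patch_command(size: str, device_set_index: int = 0) -> List[str]:
    """Build an `oc patch` call that updates the StorageCluster CR (step 2)."""
    # Assumed field path; verify it against the CR's YAML in your cluster.
    path = ("/spec/storageDeviceSets/{}".format(device_set_index)
            + "/dataPVCTemplate/spec/resources/requests/storage")
    patch = [{"op": "replace", "path": path, "value": size}]
    return ["oc", "patch", "storagecluster.ocs.openshift.io", STORAGE_CLUSTER,
            "-n", NAMESPACE, "--type", "json", "-p", json.dumps(patch)]


def expansion_commands(size: str) -> List[List[str]]:
    """Return the three PVC commands followed by the StorageCluster command."""
    commands = [pvc_resize_command(name, size) for name in PVC_NAMES]
    commands.append(storagecluster_patch_command(size))
    return commands


if __name__ == "__main__":
    # Print shell-quoted commands for review; nothing is executed here.
    for command in expansion_commands("768Gi"):
        print(shlex.join(command))
```

Printing the commands instead of executing them keeps the sketch safe to run anywhere and lets you review each command, and replace the placeholder PVC names, before applying anything to the cluster.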